Emergency Department Optimization and Load Prediction in Hospitals

Authors: Karthik K. Padthe, Vikas Kumar, Carly M. Eckert MD MPH, Nicholas M. Mark MD, Anam Zahid, Muhammad Aurangzeb Ahmad, Ankur Teredesai

Abstract

Over the past several years, the number of people seeking care in emergency departments (EDs) has increased across the globe. ED resources, including nurse staffing, are strained by such increases in patient volume. Accurate forecasting of incoming patient volume in EDs is crucial for efficient utilization and allocation of ED resources. Working with a suburban ED in the Pacific Northwest, we developed a tool powered by machine learning models to forecast ED arrivals and ED patient volume and to assist end-users, such as ED nurses, in resource allocation. In this paper, we discuss the results from our predictive models, the challenges, and the lessons learned from users’ experiences with the tool in active clinical deployment in a real-world setting.

Introduction

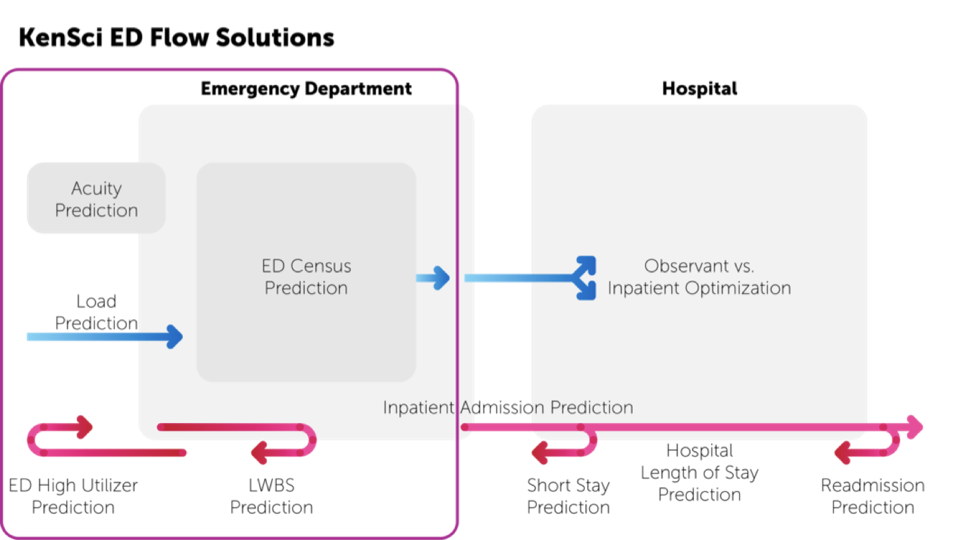

Emergency departments (EDs) are a critical component of the healthcare infrastructure and ED crowding is a global problem. In 2016 there were over 140 million ED visits in the US (Ahmad et al., 2018). The number of ED patients is growing and, according to US data, this increase has outpaced population growth for the last 20 years (Weiss et al., 2006). As a result, EDs are increasingly crowded (McCarthy et al., 2008) and ED overcrowding has been linked to decreased quality of care (Schull et al., 2003; Hwang et al., 2006), increased costs (Bayley et al., 2005), and increased patient dissatisfaction (Jenkins et al., 1998). Using machine learning models to predict ED load could ameliorate the adverse effects of crowding, and multiple strategies have been proposed, including forecasting future crowding (Hoot et al., 2009), predicting the likelihood of inpatient admission (Peck et al., 2012), and predicting the likelihood that a patient will leave the ED without being seen (Pham et al., 2009). These solutions use a variety of administrative and patient level data to attempt to mitigate common ED bottlenecks that, if uncorrected, may lead to delays, inefficiencies, and even deaths (Carter et al., 2014). Multiple factors influence ED crowding, including the number of new patients coming to the ED (arrivals), how severely sick or injured patients are (acuity), and the total number of patients in the ED (census). Each of these factors has both stochastic and deterministic components (Jones et al., 2008) and is influenced by both exogenous factors (e.g., vehicle crashes) and endogenous factors (e.g., hospital processes). In order to optimize ED flow, it is therefore necessary to integrate multiple predictions as shown in Figure [fig:edflow].

If ED load could be accurately predicted, staffing could be adjusted to optimize patient care. The ability to predict the number of patients seeking ED care on a given day is essential to optimizing nurse staffing (Batal et al., 2001). Currently, ED nurse staffing is assigned using heuristics and anecdotes such as higher census on Mondays, on days following federal holidays, and with other factors such as changes in weather, traffic, and local sporting events. Inaccurate prediction can lead to inappropriate nurse to patient ratios which can lead to dangerous under-staffing, poor clinical outcomes, nursing dissatisfaction, and burnout (Aiken et al., 2002). Matching staffing levels to the variation in daily patient demand can improve the quality of care and lead to cost savings.

[fig:edflow]

Related Work

In this paper, we present our work with a busy suburban ED in the Pacific Northwest that services a rapidly growing metropolitan area. We describe the development of novel models to predict ED arrivals and census, the design of an easily consumable dashboard integrated into the clinical workflow, and deployment of the dashboard using a live data feed. The current work also addresses a gap in the literature where there is a dearth of published work related to ED optimization in a real world setting and in production.

The availability of accessible data and computational resources has enabled the application of machine learning (ML) to healthcare at an unprecedented scale (Krumholz, 2014). While several research groups have developed ML predictions on retrospective and static ED data, operationalized ML solutions in the ED are rare. Chase et al. developed a novel indicator of a busy ED: a care utilization ratio (Chase et al., 2012). The authors report that the prediction of this ratio, which incorporates new ED arrivals, number of patients triaged, and physician capacity, provides a robust indicator of ED crowding. McCarthy et al. utilized a Poisson regression model to predict demand for ED services (McCarthy et al., 2008). They determined that, after accounting for temporal, weather, and patient-related factors (of which hour of day was the most important), ED arrivals during one hour had little to no association with the number of ED arrivals the following hour. Jones et al. explored seasonal autoregressive integrated moving average (SARIMA), time series regression, exponential smoothing, and artificial neural network models to forecast daily patient volumes and also identified seasonal and weekly patterns in ED utilization (Jones et al., 2008).

ED Predictions

The goal of our work was to optimize ED operations by accurately predicting ED arrivals and ED patient census. These predictions support staffing decisions that help manage the influxes and patterns of ED patients so that care remains safe and timely. Here we describe our approach to building the prediction models and the metrics we used to evaluate model accuracy.

Problem Description

There are two distinct yet related ED load optimization problems that we address in this work, as described below:

ED Census

ED census is defined as the total number of patients in the ED at a specified time. ED census includes patients in the waiting room, in triage, those receiving care, and those awaiting ED disposition: hospital admission, discharge, or transfer. ED census is a “snapshot” of ED utilization and includes elements related to ED arrivals as well as ED throughput. Predicting ED census can inform both short-term (minutes to hours) operations, such as reassigning staff or diverting ambulance arrivals, and longer-term (hours or longer) administrative decisions, such as calling in additional staff or sending staff members home early. We formulated this problem as a prediction of ED census at $t+2$ hours, $t+4$ hours, and $t+8$ hours, where $t$ is the prediction time. In production, these predictions are made every 15 minutes, resulting in near real-time predictions. For instance, at 3:15 PM ($t$), we predict census for 5:15 PM ($t+2$ hours), 7:15 PM ($t+4$ hours), and 11:15 PM ($t+8$ hours). Then at 3:30 PM ($t$), we predict 5:30 PM ($t+2$ hours), 7:30 PM ($t+4$ hours), and 11:30 PM ($t+8$ hours).
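The prediction schedule described above can be sketched in a few lines of Python. This is an illustration only, not the production scheduler; the function names and the example date are hypothetical.

```python
from datetime import datetime, timedelta

HORIZONS_HOURS = (2, 4, 8)      # forecast horizons named in the text
STEP = timedelta(minutes=15)    # scoring interval named in the text


def forecast_targets(t):
    """Return the target timestamps t+2h, t+4h and t+8h for prediction time t."""
    return [t + timedelta(hours=h) for h in HORIZONS_HOURS]


def scoring_times(start, count):
    """Yield `count` consecutive prediction times spaced 15 minutes apart."""
    for i in range(count):
        yield start + i * STEP


if __name__ == "__main__":
    start = datetime(2018, 1, 15, 15, 15)  # arbitrary example date, 3:15 PM
    for t in scoring_times(start, 2):
        targets = ", ".join(x.strftime("%H:%M") for x in forecast_targets(t))
        print(t.strftime("%H:%M"), "->", targets)
```

Run as a script, this prints the two scoring rounds from the worked example: 15:15 mapped to 17:15, 19:15, 23:15, and 15:30 mapped to 17:30, 19:30, 23:30.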

[tbl:features]

| Feature | Description |
|---|---|
| Prior Census/Arrival | 4 features; census/arrival at 4 time events (at 15 min intervals) prior to prediction time, i.e. 15 min, 30 min, 45 min, 60 min. |
| Month of year | January - December (12 features) |
| Hour of day | Hour of the day (24 features) |
| Day of Week | Day of the week (7 features) |
| Quarter of Year | Season: Q1 Winter, Q2 Spring, Q3 Summer, Q4 Autumn |
| Weekend Flag | Flag if prediction on Saturday or Sunday |
| Evening Flag | Flag if prediction time between 20:00 and 08:00 |
| Slope census/arrivals | Slope of change from prior census or arrival |

ED Arrivals and Acuity

ED arrivals reflect the number of individual patients who are arriving at the ED over a period of time. Arrivals can be described by the acuity level of the individual patient, an indicator of illness or injury severity assessed by nursing staff at the time of patient triage (Gilboy et al., 2012). Predictions of patient volume by acuity level can further inform staffing needs, because higher acuity patients tend to have greater intensity of staff and resource needs. Similar to the Census prediction, we framed the Arrivals prediction by acuity for 2, 4, and 8 hour forecasting. To accommodate different patterns in the acuity of patients, we built models for each individual acuity level.

Methods

For both Census and Arrivals we include temporal features such as hour of day, day of week, month of year, and quarter of year. To include the unique variations in census and arrival patterns in the evening compared to the morning as well as weekend versus weekday patterns, we included corresponding binary variables.
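As an illustration of these temporal features, the sketch below builds them for a single prediction timestamp using only the Python standard library. The feature names are hypothetical, and treating 08:00 itself as outside the evening window is our assumption about the boundary.

```python
from datetime import datetime


def temporal_features(ts):
    """Build calendar features for one prediction timestamp.

    One-hot blocks for month (12), hour (24) and day of week (7),
    plus quarter of year, a weekend flag and an evening flag.
    """
    features = {}
    for m in range(1, 13):
        features[f"month_{m}"] = int(ts.month == m)
    for h in range(24):
        features[f"hour_{h}"] = int(ts.hour == h)
    for d in range(7):  # Monday is 0, Sunday is 6
        features[f"dow_{d}"] = int(ts.weekday() == d)
    features["quarter"] = (ts.month - 1) // 3 + 1
    features["weekend_flag"] = int(ts.weekday() >= 5)
    # Evening window of 20:00 to 08:00 wraps past midnight.
    # Assumption: 08:00 itself is treated as outside the window.
    features["evening_flag"] = int(ts.hour >= 20 or ts.hour < 8)
    return features
```

For example, `temporal_features(datetime(2017, 11, 4, 21, 30))` describes a Saturday evening in the fourth quarter, so both flags are set.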

While ED census or ED arrivals may be independent from one hour to the next, we use the current ED census trend to inform future ED census. To include signals for the current census trend in our predictive models, we determine the slope from the census values at 15 minute intervals over the previous hour. In addition, we weighted the values from these 15 minute intervals so that more recent values had higher weights. The census at $t-15$ minutes, $t-30$ minutes, $t-45$ minutes, and $t-60$ minutes is weighted with 2, 0.5, 0.25, and 0.05 respectively. The weights were chosen empirically based on the performance metrics of the model. The arrivals used for the Arrivals prediction are weighted in the same way. The final set of features is shown in Table [tbl:features].
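A minimal sketch of the weighted prior values and the trend slope follows. The weights are those stated above. The text does not specify how the slope is computed, so the ordinary least-squares fit used here is an assumption, and the function names are hypothetical.

```python
# Weights from the text, for the values at t-15, t-30, t-45 and t-60 minutes.
LAG_WEIGHTS = (2.0, 0.5, 0.25, 0.05)


def weighted_lags(recent_first):
    """Scale the four prior values (most recent first) by the stated weights."""
    if len(recent_first) != len(LAG_WEIGHTS):
        raise ValueError("expected four values: t-15, t-30, t-45, t-60")
    return [w * v for w, v in zip(LAG_WEIGHTS, recent_first)]


def slope_per_interval(recent_first):
    """Least-squares slope over the prior hour, in patients per 15 minutes.

    Assumption: an ordinary least-squares line through the four points;
    the source does not state the exact slope calculation.
    """
    ys = list(reversed(recent_first))   # oldest first
    xs = list(range(len(ys)))           # 0, 1, 2, 3 intervals
    x_mean = sum(xs) / len(xs)
    y_mean = sum(ys) / len(ys)
    num = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    den = sum((x - x_mean) ** 2 for x in xs)
    return num / den
```

With a census of 36, 34, 32, and 30 patients at 15, 30, 45, and 60 minutes before the prediction time, the slope is 2 patients per 15 minute interval, indicating a rising census.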

[tbl:data]

| Acuity | ESI | Number of Encounters |
|---|---|---|
| Emergent | 1 | 1,435 |
| Emergent | 2 | 46,436 |
| Urgent | 3 | 116,808 |
| Non-Urgent | 4 | 33,023 |
| Non-Urgent | 5 | 2,315 |
| Total | | 199,957 |

Dataset Description

The data for the experiments came from a suburban level three trauma center at a hospital in the Pacific Northwest with more than 60,000 annual ED visits. The ED comprises multiple treatment spaces including 40 acute treatment rooms and 4 trauma rooms for the resuscitation of critically ill patients. Individuals are registered at the time of entry to the ED and all registered ED patients were included in this analysis. ED encounters occurring between January 2014 and January 2018 were included in the experiments. The dataset included electronic health record (EHR) data elements such as time, date, location, chief complaint, acuity score, vital signs, and others. This included 205,929 ED encounters, of which 199,957 encounters documented patient acuity. The Emergency Severity Index (ESI) is a categorical variable representing patient acuity (based on vital signs and symptoms), where ESI 1 connotes the highest urgency and ESI 5 the lowest urgency (Gilboy et al., 2012). We grouped these into three categories reflecting emergent (ESI 1 or 2), urgent (ESI 3), and non-urgent (ESI 4 or 5). The distribution of the encounters split by ESI groups is shown in Table [tbl:data].
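The ESI grouping can be written as a simple lookup. The sketch below is illustrative; the function names are hypothetical, and encounters without a documented ESI are skipped, mirroring the restriction to encounters with documented acuity.

```python
from collections import Counter

# Grouping stated in the text: ESI 1-2 emergent, ESI 3 urgent, ESI 4-5 non-urgent.
ESI_TO_ACUITY = {
    1: "Emergent",
    2: "Emergent",
    3: "Urgent",
    4: "Non-Urgent",
    5: "Non-Urgent",
}


def acuity_group(esi):
    """Map an ESI level to its acuity group, or None if ESI is missing or invalid."""
    return ESI_TO_ACUITY.get(esi)


def count_by_acuity(esi_levels):
    """Count encounters per acuity group, skipping undocumented ESI values."""
    groups = (acuity_group(e) for e in esi_levels)
    return Counter(g for g in groups if g is not None)
```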

Models

Multiple regression models were evaluated for both Census and Arrivals predictions. We chose a Generalized Linear Model with Poisson Regression (GLM) for its simplicity and its capability to model count data (Gardner et al., 1995). For validation, we included regularized variants of the GLM: Lasso, Ridge, and Elastic Net. We also included a linear Gradient Boosting Machine (GBM) due to its robustness to missing data and its predictive power (Friedman, 2001). As our baseline, we used the average arrivals and census values at the same time point from the prior two years. We used the scikit-learn package in Python 3.6 to implement all models.
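The baseline can be sketched as follows. This is one possible reading, not the authors' implementation: we assume that "the same time point" means the same calendar date and time in each of the two prior years, and the `history` mapping is a hypothetical container of observed counts keyed by timestamp.

```python
from datetime import datetime


def baseline_prediction(history, target):
    """Average the observed values at the same calendar time in the prior two years.

    `history` maps datetime -> observed count (hypothetical container).
    Years without an observation at that time are skipped; returns None
    when neither prior year has a value.
    """
    values = []
    for years_back in (1, 2):
        try:
            past = target.replace(year=target.year - years_back)
        except ValueError:  # 29 February has no counterpart in a non-leap year
            continue
        if past in history:
            values.append(history[past])
    return sum(values) / len(values) if values else None
```

A calendar-date match does not preserve the day of week, so a variant that aligns on the same weekday and hour could be a reasonable alternative under the same description.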

Evaluation metrics

We evaluate the performance of our models using root mean squared error (RMSE) and mean absolute error (MAE) (Verbiest et al., 2014), which are suitable metrics for regression. However, the real utility of ED load prediction is in staffing optimization. Most mid-size US EDs have an ED patient to nurse ratio of 4:1. Based on this, we devised an additional metric: the percentage of times the model prediction is within a threshold of ± 4 of the actual value (Absolute Error <=4). Furthermore, we also calculate the percentage of times that the model is accurate to within $70\%$ of the actual value (Accuracy$>$70%). These additional metrics frame the models' performance in terms of its effect on user workflows and provide a simple understanding of model performance under the system constraints while ensuring interpretability to end users.
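The four metrics can be computed as in the sketch below. RMSE, MAE, and the absolute error threshold follow the definitions above directly. For Accuracy > 70%, the text does not give a formula, so we assume per-prediction accuracy is $1 - |\text{error}| / \text{actual}$; the function names and the handling of an actual value of zero are also assumptions.

```python
import math


def relative_accuracy(actual, predicted):
    """Assumed definition: 1 - |error| / actual, with a guard for actual == 0."""
    if actual == 0:
        return 1.0 if predicted == 0 else 0.0
    return 1.0 - abs(actual - predicted) / actual


def evaluate(actual, predicted, abs_threshold=4, accuracy_threshold=0.70):
    """Return RMSE, MAE and the two threshold metrics (as percentages)."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("actual and predicted must be non-empty and equal length")
    errors = [abs(a - p) for a, p in zip(actual, predicted)]
    n = len(errors)
    within = sum(1 for e in errors if e <= abs_threshold)
    accurate = sum(
        1 for a, p in zip(actual, predicted)
        if relative_accuracy(a, p) > accuracy_threshold
    )
    return {
        "rmse": math.sqrt(sum(e * e for e in errors) / n),
        "mae": sum(errors) / n,
        "pct_abs_error_within_threshold": 100.0 * within / n,
        "pct_accuracy_above_threshold": 100.0 * accurate / n,
    }
```

For instance, with actual values 20, 10, 40, 8 and predictions 18, 15, 41, 8, three of the four predictions fall within ± 4 and three exceed 70% accuracy, so both threshold metrics are 75%.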

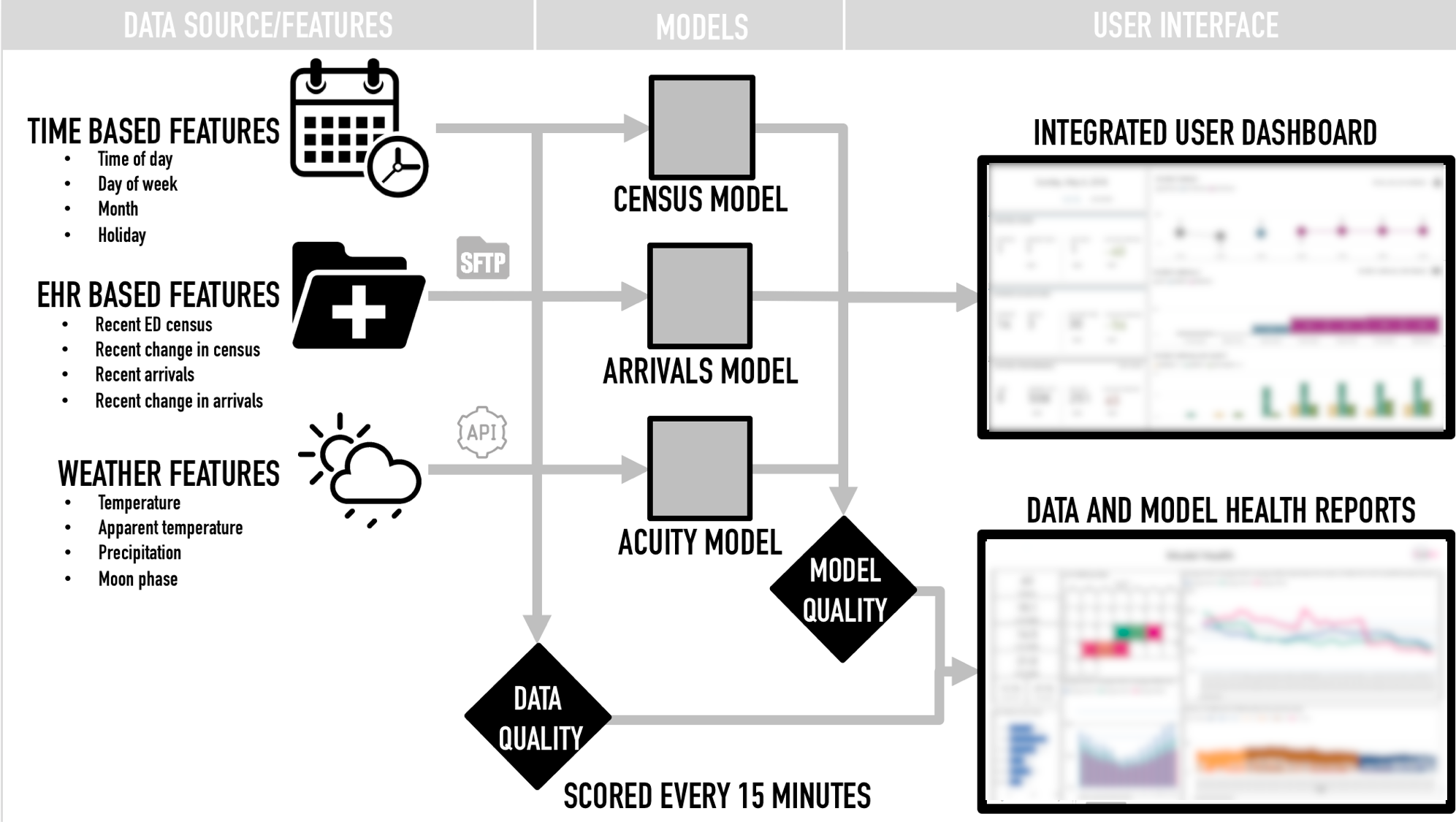

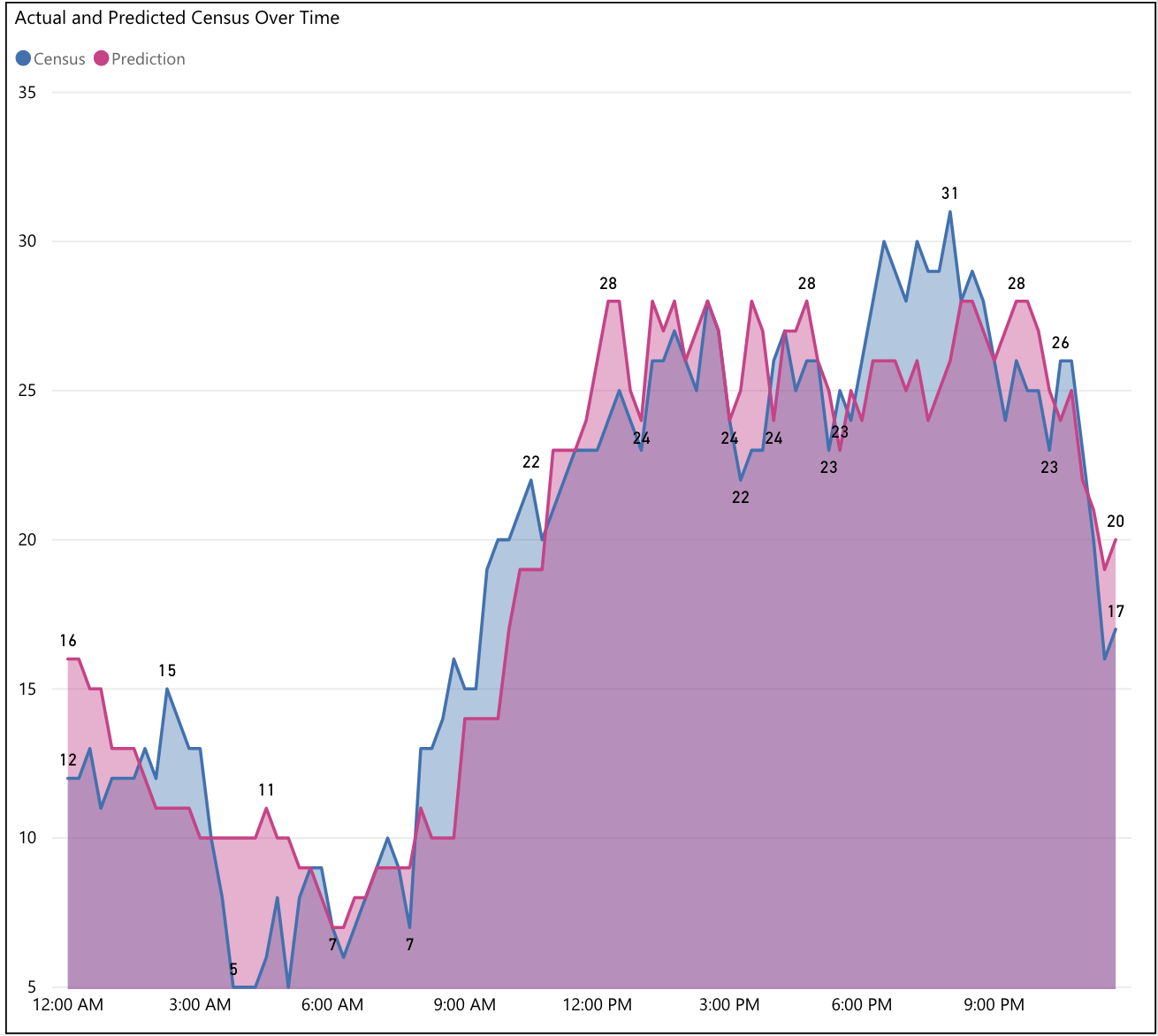

Furthermore, combining these models with a model management process to detect changes in model performance or shifts in underlying patient distributions remains novel work. Model management is an iterative process that includes monitoring and evaluating model performance to detect subtle (or unsubtle) changes in the underlying distribution of the data, permitting investigation and, if necessary, model re-training. We have implemented a workflow for automatic model monitoring; an overview is shown in Figure [fig:boat1]. As part of this workflow we created a user-friendly dashboard to track model performance and distributions; an example visual can be seen in Figure [fig:boat2].
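One simple form such monitoring could take is a rolling error check, sketched below. This is a hypothetical illustration rather than the deployed workflow: the class name, the window of 96 predictions (one day at 15 minute intervals), and the tolerance factor of 1.25 are all assumptions.

```python
from collections import deque


class RollingMaeMonitor:
    """Flag a model for review when its recent MAE drifts above a reference MAE."""

    def __init__(self, reference_mae, window=96, tolerance=1.25):
        self.reference_mae = reference_mae   # e.g. MAE measured on the test set
        self.tolerance = tolerance           # allowed ratio before flagging
        self.errors = deque(maxlen=window)   # most recent absolute errors

    def update(self, actual, predicted):
        """Record one resolved prediction and report whether review is needed."""
        self.errors.append(abs(actual - predicted))
        return self.needs_review()

    def rolling_mae(self):
        """Mean absolute error over the current window, or None if empty."""
        return sum(self.errors) / len(self.errors) if self.errors else None

    def needs_review(self):
        """True only once the window is full and the rolling MAE exceeds the limit."""
        if len(self.errors) < self.errors.maxlen:
            return False
        return self.rolling_mae() > self.tolerance * self.reference_mae
```

A flag from a check like this would prompt investigation and, if warranted, re-training, in line with the iterative process described above.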

[fig:boat1]

Results

Data from January 2014 to October 2017 were used to train the models, and data from November 2017 to January 2018 were used to test them. The performance metrics of the census models for 2 hour prediction are shown in Table [tbl:results]. Among these, the Gradient Boosting Machine (GBM) performed best on all metrics, which we believe is due to its robustness to the sparsity in the data. The 4 and 8 hour GBM census model MAEs are 4.0739 and 4.2960 respectively. The Accuracy $>$ 70% metric shows that the GBM prediction is within 70% of the actual census 81.52% of the time, and the absolute error metric shows that it is within ±4 of the actual census 72.90% of the time.
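The chronological split stated at the start of this section can be sketched as below. The row layout, a tuple whose first element is the prediction timestamp, is a hypothetical choice for illustration.

```python
from datetime import datetime

TEST_START = datetime(2017, 11, 1)  # first test month stated in the text


def chronological_split(rows, cutoff=TEST_START):
    """Split rows into train (before cutoff) and test (cutoff onward).

    Each row is assumed to be a tuple whose first element is a datetime.
    No shuffling is done, so the test period lies strictly after training.
    """
    train = [r for r in rows if r[0] < cutoff]
    test = [r for r in rows if r[0] >= cutoff]
    return train, test
```

Splitting by time rather than at random keeps future observations out of the training data, which matters for forecasting models that use lagged values as features.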

[tbl:results_arrival]

| Acuity | Time Window | Model | RMSE | MAE | Absolute Error < 4 | Accuracy > 70% |
|---|---|---|---|---|---|---|
| Emergent | 2 hour | GLM | 1.9747 | 1.4267 | 96.33 | 32.80 |
| Emergent | 2 hour | GLM Lasso | 2.1492 | 1.5400 | 94.96 | 31.96 |
| Emergent | 2 hour | GLM Ridge | 2.0039 | 1.4451 | 96.05 | 32.45 |
| Emergent | 2 hour | GLM Elastic Net | | | | |
| Emergent | 2 hour | GBM | 1.9768 | 1.4283 | 96.38 | 32.97 |
| Emergent | 2 hour | Baseline | 2.0278 | 1.5174 | 96.59 | 21.63 |
| Emergent | 4 hour | | | | | |
| Emergent | 8 hour | | | | | |
| Urgent | 2 hour | | | | | |
| Urgent | 4 hour | | | | | |
| Urgent | 8 hour | | | | | |
| Non-Urgent | 2 hour | | | | | |
| Non-Urgent | 4 hour | | | | | |
| Non-Urgent | 8 hour | | | | | |

[fig:boat2]

For arrival models, we built 9 models, one for each combination of acuity level and prediction window (2, 4, and 8 hours). We observed that the gradient boosting model performed better than the other models and the baseline for Emergent acuity encounters, whereas for Urgent and Non-Urgent acuity the GLM models performed better. The results are shown in Table [tbl:results_arrival]. The absolute error and accuracy were only available for a subset of models. We observe that the MAE and RMSE for all models across different levels of acuity are similar if we consider 2 hour and 4 hour windows. However, performance degrades if we consider 8 hour windows. This is not unexpected, since trends can vary greatly across longer time spans (e.g., compare ED trends at 2 am vs. 10 am).

[tbl:results]

| Time window | Model | RMSE | MAE | Absolute Error < 4 | Accuracy > 70% |
|---|---|---|---|---|---|
| 2 hour | GLM | 4.3343 | 3.3812 | 71.80 | 80.42 |
| 2 hour | GLM-Lasso | 4.6816 | 3.6642 | 68.41 | 77.83 |
| 2 hour | GLM-Ridge | 4.5305 | 3.4975 | 70.79 | 72.80 |
| 2 hour | GLM-Elastic Net | 4.6550 | 3.6395 | 69.45 | 78.47 |
| 2 hour | GBM | 4.2013 | 3.2790 | 72.90 | 81.52 |
| 2 hour | Baseline | 6.9026 | 5.3926 | 51.19 | 60.19 |
| 4 hour | GLM | 5.1491 | 4.0173 | 64.66 | 74.95 |
| 4 hour | GLM-Lasso | 6.0410 | 4.6643 | 56.92 | 68.78 |
| 4 hour | GLM-Ridge | 5.2855 | 4.1111 | 63.85 | 74.44 |
| 4 hour | GLM-Elastic Net | 6.1962 | 4.7468 | 57.32 | 67.59 |
| 4 hour | GBM | 5.1784 | 4.0241 | 64.35 | 75.05 |
| 8 hour | GLM | 5.5026 | 4.2960 | 61.42 | 72.51 |
| 8 hour | GLM-Lasso | 6.1726 | 4.8158 | 55.62 | 67.26 |
| 8 hour | GLM-Ridge | 5.5829 | 4.3632 | 60.68 | 72.25 |
| 8 hour | GLM-Elastic Net | 6.6123 | 5.1085 | 53.79 | 64.54 |
| 8 hour | GBM | 5.6013 | 4.3693 | 60.50 | 71.99 |

ED Experience

A key differentiator of the work that we present here is that our prediction models were fully operationalized into the clinical workflow, that of the ED charge nurse. Through collaborative design and planning sessions with ED nurses and other health system stakeholders, we developed an ED dashboard to surface the results of our predictions. Prediction based tools are often beset by difficulties in end-user understanding of probability based results (Jeffery et al., 2017). Part of the solution to this problem is the early incorporation of end-user feedback and open discussions around tool utility.

Our dashboard was deployed for 6 months as part of a pilot in a large suburban ED. As part of this pilot, data quality was monitored continuously and multiple ML models were scored at 15 minute intervals. End-user training was conducted during the pilot period. During this period, charge nurses completed forms at the conclusion of each shift documenting their use of the dashboard and any actions the dashboard prompted (such as calling in additional staff for projected high load or sending staff home early for projected low load). In addition to the potential impact on nurse staffing, accurately forecasting ED arrivals and census may optimize care delivery in other ways, such as reducing waiting times, ED length of stay, and rates of patients leaving without being seen. These additional key performance indicators (KPIs) were also evaluated to determine the clinical utility of the deployed predictions. The iterative nature of this approach speaks to the engagement needs of the clinical end-users and the imperative of operationalizing machine learning in healthcare. While accurate predictions are key to implementation success and end-user adoption, simple metrics such as prevalence of accuracy above a threshold (Accuracy $>$ 70%) will help health system stakeholders evaluate the impact and maintenance cost over a period of time.

Discussion

Our work demonstrates that subtle patterns in exogenous and endogenous variability in patient flow can be utilized to predict, with high accuracy, ED patient arrivals and census. Deployment of ML-based predictive models into a complex clinical workflow is challenging. However, predicting ED census is an ideal ML healthcare problem to study for several reasons. First, predicting ED census every 15 minutes across 12 different models allows for 1,152 predictions daily. Each prediction is clearly falsifiable with a measurable outcome (the actual number of arrivals and patient census), and the follow-up interval is short (e.g., one must only wait 8 hours to determine the accuracy of all predictions). Second, many healthcare ML models are degraded by data censoring; for example, when predicting 30-day hospital readmissions, a patient who appears to have avoided readmission may instead have been readmitted at another facility. Additionally, according to the work of Jeffery and colleagues, prediction based tools are most useful when prompt decision and action are warranted by the end-users (Jeffery et al., 2017). However, in some cases, such as predicting hospital readmissions, the action of the clinician can alter the outcome, thus making the prediction appear erroneous. In predicting ED load, there are no actions that the users can take (other than the ED going on diversion status, which is rare) that will alter the number of arrivals or census. The large number of predictions, the short follow-up interval, and the availability of 'perfect information' about outcomes (akin to 'perfect information' games like chess) make ED load prediction an ideal place to optimize model management processes.
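The daily prediction count can be checked with a short calculation. The breakdown of the 12 models into 3 census models (one per horizon) and 9 arrival models (three acuity groups by three horizons) is our reading of the model descriptions earlier in the paper.

```python
MINUTES_PER_DAY = 24 * 60
SCORING_INTERVAL_MINUTES = 15
HORIZONS_HOURS = (2, 4, 8)
ACUITY_GROUPS = ("Emergent", "Urgent", "Non-Urgent")

census_models = len(HORIZONS_HOURS)                         # one per horizon
arrival_models = len(ACUITY_GROUPS) * len(HORIZONS_HOURS)   # one per group and horizon
total_models = census_models + arrival_models

runs_per_day = MINUTES_PER_DAY // SCORING_INTERVAL_MINUTES  # 15 minute scoring rounds
predictions_per_day = runs_per_day * total_models

print(total_models, runs_per_day, predictions_per_day)
```

This gives 96 scoring rounds per day across 12 models, or 1,152 predictions daily.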

We are continuing to improve the performance and clinical utility of these models by integrating additional data sources into our predictions. These sources include local weather data, local sporting events, local traffic, local emergency medical services (EMS) activity, and Google Trends searches. We plan to further improve this solution by providing interpretability for the predictions to help ED staff make informed decisions (Ahmad et al., 2018).

References

- Ahmad, M. A., Eckert, C., & Teredesai, A. (2018). Interpretable machine learning in healthcare. Proceedings of the 2018 ACM International Conference on Bioinformatics, Computational Biology, and Health Informatics, 559–560.
- Jeffery, A. D., Novak, L. L., Kennedy, B., Dietrich, M. S., & Mion, L. C. (2017). Participatory design of probability-based decision support tools for in-hospital nurses. Journal of the American Medical Informatics Association, 24(6), 1102–1110.
- Verbiest, N., Vermeulen, K., & Teredesai, A. (2014). Evaluation of classification methods. Data Classification: Algorithms and Applications.
- Carter, E. J., Pouch, S. M., & Larson, E. L. (2014). The relationship between emergency department crowding and patient outcomes: a systematic review. Journal of Nursing Scholarship, 46(2), 106–115.
- Krumholz, H. M. (2014). Big Data And New Knowledge In Medicine: The Thinking, Training, And Tools Needed For A Learning Health System. Health Affairs, 33(7), 1163–1170. https://doi.org/10.1377/hlthaff.2014.0053
- Chase, V. J., Cohn, A. E. M., Peterson, T. A., & Lavieri, M. S. (2012). Predicting emergency department volume using forecasting methods to create a “surge response” for noncrisis events. Academic Emergency Medicine, 19(5), 569–576.
- Gilboy, N., Tanabe, P., Travers, D., Rosenau, A. M., & others. (2012). Emergency Severity Index (ESI): a triage tool for emergency department care, version 4. Implementation Handbook, 12–0014.
- Peck, J. S., Benneyan, J. C., Nightingale, D. J., & Gaehde, S. A. (2012). Predicting Emergency Department Inpatient Admissions to Improve Same-day Patient Flow: PREDICTING ED INPATIENT ADMISSIONS. Academic Emergency Medicine, 19(9), E1045–E1054. https://doi.org/10.1111/j.1553-2712.2012.01435.x
- Žliobaitė, I. (2010). Learning under concept drift: an overview. ArXiv Preprint ArXiv:1010.4784.
- Pham, J. C., Ho, G. K., Hill, P. M., McCarthy, M. L., & Pronovost, P. J. (2009). National study of patient, visit, and hospital characteristics associated with leaving an emergency department without being seen: predicting LWBS. Academic Emergency Medicine, 16(10), 949–955.
- NCHS. (2009). National hospital Ambulatory Medical Care Survey: 2015 emergency department summary tables. https://www.cdc.gov/nchs/data/nhamcs/web_tables/2015_ed_web_tables.pdf
- Hoot, N. R., LeBlanc, L. J., Jones, I., Levin, S. R., Zhou, C., Gadd, C. S., & Aronsky, D. (2009). Forecasting Emergency Department Crowding: A Prospective, Real-time Evaluation. Journal of the American Medical Informatics Association, 16(3), 338–345. https://doi.org/10.1197/jamia.M2772
- Jones, S. S., Evans, R. S., Allen, T. L., Thomas, A., Haug, P. J., Welch, S. J., & Snow, G. L. (2009). A multivariate time series approach to modeling and forecasting demand in the emergency department. Journal of Biomedical Informatics, 42(1), 123–139.
- Bernstein, S. L., Aronsky, D., Duseja, R., Epstein, S., Handel, D., Hwang, U., McCarthy, M., John McConnell, K., Pines, J. M., Rathlev, N., & others. (2009). The effect of emergency department crowding on clinically oriented outcomes. Academic Emergency Medicine, 16(1), 1–10.
- Jones, S. S., Thomas, A., Evans, R. S., Welch, S. J., Haug, P. J., & Snow, G. L. (2008). Forecasting daily patient volumes in the emergency department. Academic Emergency Medicine, 15(2), 159–170.
- McCarthy, M. L., Zeger, S. L., Ding, R., Aronsky, D., Hoot, N. R., & Kelen, G. D. (2008). The challenge of predicting demand for emergency department services. Academic Emergency Medicine, 15(4), 337–346.
- Flottemesch, T. J., Gordon, B. D., & Jones, S. S. (2007). Advanced statistics: developing a formal model of emergency department census and defining operational efficiency. Academic Emergency Medicine, 14(9), 799–809.
- Weiss, A. J., Wier, L. M., Stocks, C., & Blanchard, J. (2006). Overview of emergency department visits in the United States, 2011: statistical brief 174.
- Hwang, U., Richardson, L. D., Sonuyi, T. O., & Morrison, R. S. (2006). The effect of emergency department crowding on the management of pain in older adults with hip fracture. Journal of the American Geriatrics Society, 54(2), 270–275.
- Bayley, M. D., Schwartz, J. S., Shofer, F. S., Weiner, M., Sites, F. D., Traber, K. B., & Hollander, J. E. (2005). The financial burden of emergency department congestion and hospital crowding for chest pain patients awaiting admission. Annals of Emergency Medicine, 45(2), 110–117.
- Schull, M. J., Morrison, L. J., Vermeulen, M., & Redelmeier, D. A. (2003). Emergency department overcrowding and ambulance transport delays for patients with chest pain. Canadian Medical Association Journal, 168(3), 277–283.
- Aiken, L. H., Clarke, S. P., Sloane, D. M., Sochalski, J., & Silber, J. H. (2002). Hospital nurse staffing and patient mortality, nurse burnout, and job dissatisfaction. JAMA, 288(16), 1987–1993.
- Friedman, J. H. (2001). Greedy function approximation: a gradient boosting machine. Annals of Statistics, 1189–1232.
- Batal, H., Tench, J., McMillan, S., Adams, J., & Mehler, P. S. (2001). Predicting patient visits to an urgent care clinic using calendar variables. Academic Emergency Medicine, 8(1), 48–53.
- Jenkins, M. G., Rocke, L. G., McNicholl, B. P., & Hughes, D. M. (1998). Violence and verbal abuse against staff in accident and emergency departments: a survey of consultants in the UK and the Republic of Ireland. Emergency Medicine Journal, 15(4), 262–265.
- Gardner, W., Mulvey, E. P., & Shaw, E. C. (1995). Regression analyses of counts and rates: Poisson, overdispersed Poisson, and negative binomial models. Psychological Bulletin, 118(3), 392.